5 questions to ask your incrementality-test provider before launching an experiment

Before running an incrementality test, it’s worth checking that the design, execution, and metrics will give you results you can trust. These five questions help you understand whether your provider can deliver lift estimates that are both methodologically sound and genuinely useful for decision-making.


Incrementality testing has become a cornerstone of modern marketing measurement for many scaled brands.

Done well, it offers valuable causal validation that helps teams understand what’s truly driving incremental growth.

A large share of failed tests don’t fall down on the maths; they fail earlier, in the design, execution, or interpretation.

Before investing time and budget, it’s worth asking these five questions to ensure your incrementality partner can deliver insights that are both statistically sound and actionable.

Question 1: How do you prevent contamination and external factors from biasing results?

Geo tests and holdouts can be powerful, but they are exposed to real‑world complexity.

People commute between regions, platforms sometimes deliver ads outside intended boundaries, and local events can influence outcomes.

To make results dependable, your provider should be transparent about how they identify and control for these effects.

What good looks like

Spillover and mobility awareness

Good practice is to minimize overlap between test and control, where feasible. Many advanced providers use mobility-aware geographies (for example, commuting-zone style groupings) and document expected and observed crossover, including an estimated cross‑exposure rate.
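As a rough illustration of documenting expected crossover, the Python sketch below estimates a cross-exposure rate from a commuting-flow table. It assumes you have counts of residents by home region and by the region where they spend their working day. The `flows` data, the region names, and the `expected_cross_exposure` function are hypothetical, and treating "works in a test region" as "potentially exposed" is a simplifying assumption rather than a standard definition.

```python
# Hypothetical commuting-flow table: flows[home][work] = residents of `home`
# who spend their working day in `work`. Region names are illustrative.
Flows = dict[str, dict[str, int]]


def expected_cross_exposure(flows: Flows, test: set[str], control: set[str]) -> float:
    """Share of control-region residents who commute into a test region.

    Simplifying assumption: anyone who works in a test region is treated as
    potentially exposed to test ads; everyone else is treated as unexposed.
    """
    if test & control:
        raise ValueError("test and control regions must not overlap")
    residents = 0
    crossing = 0
    for home in control:
        for work, count in flows.get(home, {}).items():
            residents += count
            if work in test:
                crossing += count
    if residents == 0:
        raise ValueError("no residents recorded for the control regions")
    return crossing / residents


flows = {
    "A": {"A": 900, "B": 100},            # A is the test region
    "B": {"B": 800, "A": 150, "C": 50},
    "C": {"C": 950, "A": 50},
}
rate = expected_cross_exposure(flows, test={"A"}, control={"B", "C"})
print(f"expected cross-exposure: {rate:.1%}")  # expected cross-exposure: 10.0%
```

Swapping the `test` and `control` arguments gives the opposite direction: the share of test-region residents who spend their day in control regions (also 10% in this toy data). Observed crossover still has to come from delivery data once the test is live.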

Monitoring local shocks

Robust programs monitor factors such as competitor promotions, stockouts, or local events that could skew performance, and pre‑define exclusion or adjustment rules.

Transparent counterfactual design

A well-run test clearly explains how control regions are selected and validated. Synthetic controls or pre-period fit analysis (i.e. checking that test and control behave similarly before launch) can improve comparability when pre‑period fit metrics are adequate, but what matters at least as much is a transparent rationale.

Red flags

1. Generic geo lists without evidence of spillover checks

2. No record of concurrent campaigns or local disruptions

3. No documentation of contamination diagnostics

If these controls aren’t documented, it’s safer to treat the reported lift as directional rather than definitive.

Question 2: What are your quality-control processes for execution?

In many failed tests, the issue isn’t statistical significance; it’s executional drift.

Incorrect dates, missing suppressions, or overlapping audiences can compromise validity before the first impression is even served.

What good looks like

Pre-flight checklist and sign-off

Audience definitions, spend caps, exclusions, and start dates are reviewed and approved before launch.
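Parts of a pre-flight checklist can be automated. The sketch below assumes a hypothetical `TestSetup` record (the field names are illustrative, not any ad platform's API) and returns a list of problems to resolve before sign-off. An empty list means only that these automated checks passed, not that the design is sound.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class TestSetup:
    """Hypothetical test configuration; field names are illustrative."""
    start: date
    end: date
    daily_spend_cap: float
    test_geos: set[str]
    control_geos: set[str]
    excluded_geos: set[str] = field(default_factory=set)  # geos suppressed in the ad account
    approved_by: str = ""


def preflight_issues(setup: TestSetup, today: date) -> list[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    issues = []
    if setup.start <= today:
        issues.append("start date is not in the future")
    if setup.end <= setup.start:
        issues.append("end date must be after start date")
    if setup.daily_spend_cap <= 0:
        issues.append("daily spend cap must be positive")
    overlap = setup.test_geos & setup.control_geos
    if overlap:
        issues.append(f"geos in both test and control: {sorted(overlap)}")
    unsuppressed = setup.control_geos - setup.excluded_geos
    if unsuppressed:
        issues.append(f"control geos not excluded from delivery: {sorted(unsuppressed)}")
    if not setup.approved_by:
        issues.append("no sign-off recorded")
    return issues


setup = TestSetup(
    start=date(2025, 3, 3),
    end=date(2025, 4, 27),
    daily_spend_cap=5_000.0,
    test_geos={"North", "Midlands"},
    control_geos={"South", "Midlands"},
    excluded_geos={"South"},
)
for issue in preflight_issues(setup, today=date(2025, 2, 20)):
    print("-", issue)
```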

Runbook and event log

A timestamped log of campaign changes, creative swaps, outages, and promotions provides transparency and supports later diagnostics.
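A runbook log doesn't need special tooling; what matters is that every change is timestamped and can be queried later. Below is a minimal sketch with hypothetical `LogEntry` and `EventLog` classes and made-up entries, showing how a window query lines logged events up against an unexpected movement in the data.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LogEntry:
    at: datetime
    kind: str    # e.g. "creative_swap", "outage", "promotion", "budget_change"
    detail: str


class EventLog:
    """Append-only, timestamped record of changes made during a test."""

    def __init__(self) -> None:
        self._entries: list[LogEntry] = []

    def record(self, at: datetime, kind: str, detail: str) -> None:
        self._entries.append(LogEntry(at, kind, detail))

    def between(self, start: datetime, end: datetime) -> list[LogEntry]:
        """Entries with start <= at <= end, oldest first."""
        hits = [e for e in self._entries if start <= e.at <= end]
        return sorted(hits, key=lambda e: e.at)


log = EventLog()
log.record(datetime(2025, 3, 10, 9, 0), "creative_swap", "Replaced hero video in test geos")
log.record(datetime(2025, 3, 4, 14, 30), "outage", "Checkout down for 2 hours")
log.record(datetime(2025, 3, 21, 8, 0), "promotion", "Competitor 20% sale in North")

# Which logged events could explain a dip seen in the week of 3 March?
for entry in log.between(datetime(2025, 3, 3), datetime(2025, 3, 9, 23, 59)):
    print(entry.at, entry.kind, entry.detail)
```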

Guardrails against platform bleed

Ads don’t always respect test boundaries.

Account- or campaign-level exclusions, plus verification from delivery data, help reduce leakage.
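Verification from delivery data can be as simple as checking what share of impressions landed in holdout geos. The sketch assumes a per-geo impression report (the `delivery` numbers are invented) and a tolerance agreed before launch; the 1% value here is purely illustrative.

```python
def leakage_share(impressions_by_geo: dict[str, int], control_geos: set[str]) -> float:
    """Fraction of delivered impressions that landed in control (holdout) geos."""
    total = sum(impressions_by_geo.values())
    if total == 0:
        raise ValueError("no impressions in the delivery report")
    leaked = sum(n for geo, n in impressions_by_geo.items() if geo in control_geos)
    return leaked / total


# Illustrative tolerance only; agree the real threshold before launch.
MAX_LEAKAGE = 0.01

delivery = {"North": 61_000, "Midlands": 37_000, "South": 1_500, "Wales": 500}
share = leakage_share(delivery, control_geos={"South", "Wales"})
if share > MAX_LEAKAGE:
    print(f"Leakage {share:.1%} exceeds {MAX_LEAKAGE:.0%}: review exclusions")
```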

Health checks and interim reviews

Reliable tests include variance checks and agreed thresholds for reruns or extensions if assumptions are broken.

Red flags

1. No written setup documentation

2. Overlapping tests within the same region or audience

3. Adjusting parameters mid-test without logging changes

Without operational discipline, analytics often can’t recover validity.

Question 3: What is the single causal hypothesis we’re testing, and what is our success metric?

Every robust test starts with a clear hypothesis and a metric that fits the question.

Without that, results can look convincing but offer little actionable insight.

What good looks like

A strong test defines one primary hypothesis and one primary success metric, supported by secondary metrics for context.

Examples:

Prospecting spend test

Hypothesis: Increasing prospecting spend in the US will lift new-customer acquisition.

Primary metric: Incremental new-customer lift.

Secondary metrics: Branded search, assisted conversions.

Guardrail: Blended CAC should not materially worsen.

YouTube awareness test

Hypothesis: Always-on YouTube reach will improve awareness and consideration, with measurable downstream impact.

Primary metric: Brand-lift or awareness delta.

Secondary metrics: Delayed conversion lift, branded search activity.

The goal is metric-method fit:

Direct-response tests: incremental ROI or CAC.

Upper-funnel tests: new-customer lift, blended revenue change, or downstream halo.
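For direct-response tests, the headline metrics are simple ratios once a counterfactual has been estimated (for example, from control geos). The sketch below uses iROAS (incremental return on ad spend) as one common form of incremental ROI: incremental revenue divided by incremental spend. Incremental CAC is incremental spend divided by incremental new customers. The function name and all figures are hypothetical.

```python
def incremental_metrics(
    test_revenue: float,
    counterfactual_revenue: float,
    test_new_customers: int,
    counterfactual_new_customers: int,
    incremental_spend: float,
) -> dict[str, float]:
    """iROAS and incremental CAC from a test read.

    Counterfactual values are what the test geos would have produced without
    the extra spend, e.g. as estimated from the control geos.
    """
    if incremental_spend <= 0:
        raise ValueError("incremental spend must be positive")
    inc_revenue = test_revenue - counterfactual_revenue
    inc_customers = test_new_customers - counterfactual_new_customers
    iroas = inc_revenue / incremental_spend
    # With no incremental customers, CAC is unbounded rather than a real number.
    icac = incremental_spend / inc_customers if inc_customers > 0 else float("inf")
    return {"iroas": iroas, "incremental_cac": icac}


print(incremental_metrics(250_000, 200_000, 1_400, 1_000, 20_000))
# {'iroas': 2.5, 'incremental_cac': 50.0}
```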

Power analysis, duration planning, and stopping rules are also defined upfront, before the test launches.
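As a back-of-envelope version of power and duration planning, the sketch below applies the standard two-sample z-test sample-size formula to estimate how many days each arm needs to detect a given relative lift in a daily KPI. The function name and inputs (baseline daily volume, day-to-day standard deviation) are assumptions, and treating days as independent is a simplification that real geo data usually violates.

```python
from math import ceil
from statistics import NormalDist


def required_days_per_arm(
    baseline_daily: float,
    daily_sd: float,
    target_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Days per arm to detect a relative lift in a daily KPI (two-sided z-test).

    Simplifying assumptions: days are independent, both arms share the same
    daily standard deviation, and the effect is a shift in the daily mean.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    delta = baseline_daily * target_lift  # absolute daily effect to detect
    n = 2 * ((z_alpha + z_power) * daily_sd / delta) ** 2
    return ceil(n)


for lift in (0.05, 0.10):
    print(f"{lift:.0%} lift: {required_days_per_arm(1_000, 150, lift)} days per arm")
```

With a baseline of 1,000 conversions a day and a daily standard deviation of 150, a 5% lift needs about 142 days per arm while a 10% lift needs about 36: doubling the effect size cuts the required duration roughly fourfold. Because daily marketing data is usually autocorrelated, these figures are optimistic; a provider's method-specific power analysis (for example, simulation on pre-period data) should set the final duration.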

Red flags

1. Undefined or shifting hypotheses

2. Using iROAS (incremental return on ad spend) as the sole KPI for awareness tests

3. No documentation of power or duration planning

When hypotheses and metrics are aligned, the resulting lift becomes meaningful evidence rather than noise.

Question 4: How much support do you provide for turning test outputs into business decisions?

Incrementality results are highly valuable, but they’re also snapshots.

They show how marketing performed under specific conditions, not necessarily where the next dollar should go tomorrow.

That’s where integration matters.

What good looks like

Calibration, not isolation

In more mature setups, lift results feed into an always-on measurement model that continuously refines forecasts and spend curves. This ensures test learnings remain relevant beyond the experiment window.
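To show what calibration can mean mechanically, here is a deliberately simplified sketch: a precision-weighted (normal-normal Bayesian) update that combines an always-on model's ROI estimate for one channel with an experiment's estimate. It is not Fospha's model or any provider's method; the function name and numbers are hypothetical, and real systems may calibrate richer parameters, such as response curves, rather than a single ROI figure.

```python
from math import sqrt


def calibrate(prior_mean: float, prior_sd: float, test_mean: float, test_sd: float) -> tuple[float, float]:
    """Combine a model's prior ROI estimate with an experiment's estimate.

    Normal-normal conjugate update: each source is weighted by its precision
    (1 / variance), so a tighter experiment pulls the estimate further.
    """
    prior_precision = 1 / prior_sd**2
    test_precision = 1 / test_sd**2
    post_precision = prior_precision + test_precision
    post_mean = (prior_mean * prior_precision + test_mean * test_precision) / post_precision
    return post_mean, sqrt(1 / post_precision)


roi, roi_sd = calibrate(prior_mean=2.0, prior_sd=0.5, test_mean=1.4, test_sd=0.25)
print(f"calibrated ROI: {roi:.2f} ± {roi_sd:.2f}")  # calibrated ROI: 1.52 ± 0.22
```

Because the test read is more precise than the prior, the calibrated estimate lands closer to the experiment's 1.4 than to the model's 2.0, and its uncertainty shrinks below both inputs.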

From test result to the next marginal dollar

The strongest programs help teams translate lift results into ongoing budget and channel decisions, so causal learnings actually influence day-to-day optimization.
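Lift results describe average effects, but budget decisions turn on marginal ones. The sketch below assumes each channel has a fitted saturating response curve of the form `revenue = ceiling * (1 - exp(-spend / scale))` and compares the return on the next unit of spend across channels. The curve shape, parameters, and channel figures are illustrative assumptions.

```python
from math import exp


def marginal_return(spend: float, ceiling: float, scale: float) -> float:
    """Revenue from the next unit of spend on an assumed saturating curve.

    Assumed curve: revenue(spend) = ceiling * (1 - exp(-spend / scale)),
    whose derivative is (ceiling / scale) * exp(-spend / scale).
    """
    return ceiling / scale * exp(-spend / scale)


# Hypothetical fitted curves and current spend levels.
channels = {
    "search": {"spend": 40_000, "ceiling": 200_000, "scale": 30_000},
    "social": {"spend": 20_000, "ceiling": 150_000, "scale": 50_000},
}
returns = {name: marginal_return(**p) for name, p in channels.items()}
best = max(returns, key=returns.get)
for name, r in returns.items():
    print(f"{name}: {r:.2f} revenue per extra unit of spend")
print("next dollar to:", best)
```

In this toy example search generates more total revenue at its current spend, yet social returns more on the next unit, because search is further along its saturation curve.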

Cross-stack triangulation

Test results are most powerful when interpreted alongside other sources, such as marketing mix model (MMM) trends and platform-level attribution, so that marketing and finance work from a single view.

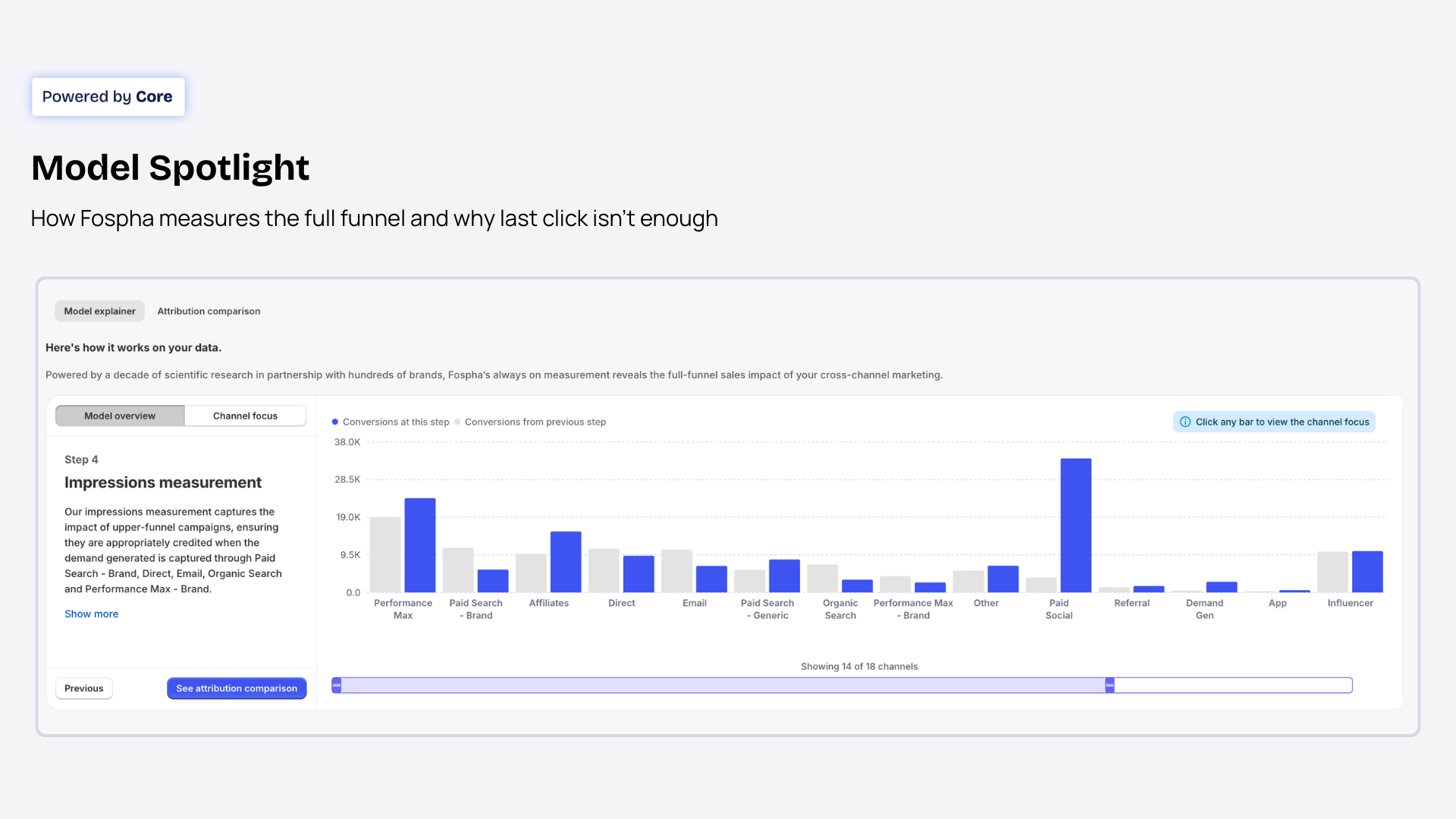

At Fospha, this is how we see the stack working: incrementality provides causal validation, while our Bayesian MMM is designed to provide always-on, full-funnel measurement that unifies DTC and marketplace data.

Red flags

1. Hand-over ends at “here’s your lift report”

2. No plan to integrate test learnings into always-on systems

3. Tests run in isolation with no link to broader marketing mix

A good test explains what worked; a good system helps you act on that insight going forward.

Question 5: How do you account for lag effects and cross-channel halo?

Campaign impact often extends beyond a test window or channel.

A Meta campaign might influence branded search or Amazon sales weeks later; a YouTube burst may drive consideration that converts elsewhere.

If those effects aren’t measured, the test can over- or under-estimate true performance.

What good looks like

Lag and read windows

Tests commonly allow sufficient time to capture delayed conversions or use decay models to adjust for adstock effects (how the impact of spend decays over time).
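Geometric adstock is one common decay model, though not the only one. The sketch below applies it to a spend series and estimates how long a read window must run to capture most of a one-off burst's effect; the function names, decay values, and 5% threshold are illustrative assumptions.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carry-over-adjusted spend: each period keeps `decay` of the previous total."""
    if not 0 <= decay < 1:
        raise ValueError("decay must be in [0, 1)")
    carried = 0.0
    adstocked = []
    for s in spend:
        carried = s + decay * carried
        adstocked.append(carried)
    return adstocked


def periods_until_decayed(decay: float, threshold: float = 0.05) -> int:
    """Smallest k with decay**k < threshold.

    Under geometric adstock, decay**k is also the share of a burst's total
    effect that falls after its first k periods (counting the burst period).
    """
    if not 0 <= decay < 1 or not 0 < threshold <= 1:
        raise ValueError("need 0 <= decay < 1 and 0 < threshold <= 1")
    periods, remaining = 0, 1.0
    while remaining >= threshold:
        remaining *= decay
        periods += 1
    return periods


print(geometric_adstock([100, 0, 0, 0], decay=0.5))  # [100.0, 50.0, 25.0, 12.5]
print(periods_until_decayed(0.7))                    # 9
```

With a weekly decay of 0.7, a read window of nine weeks (counting the burst week) is needed before less than 5% of the burst's total effect falls outside it, which is why very short read windows tend to understate impact.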

Cross-channel halo

Correlated movement across search, direct, and marketplace channels is measured where feasible, with controls in place to avoid double-counting.

Marketplace measurement

Where relevant, ensure the system links ads to downstream marketplace outcomes, not just on-site sales.

Brand-to-performance linkage

For awareness campaigns, connect brand-lift or sentiment data to later sales signals to show the full-funnel impact.

This is central to Fospha’s full-funnel approach. Our Bayesian MMM is built to estimate lag and halo effects and update them over time. Where test results are available, we use them as an extra signal to refine those estimates and improve future predictions.

Red flags

1. Declaring results after very short test windows

2. Ignoring branded search or marketplace spillover

3. No mention of adstock or lag assumptions

When lag and halo are accounted for, test results become far more reflective of real business impact.

A practical buyer’s checklist

Ask your provider to share:

1. Geo design and contamination diagnostics

2. Pre-registered hypothesis, metrics, and test parameters

3. Runbook and event logs for transparency

4. Plan for integrating learnings into always-on measurement

5. Methodology for lag and halo effects

Quick warning signs

1. “Matched markets” without validation checks

2. iROAS used indiscriminately across campaign types

3. No documentation or overlapping tests

4. No integration path into your broader measurement system

The Fospha point of view

Incrementality testing is widely regarded as one of the most direct ways to validate cause and effect in marketing.

Fospha’s role is to make those insights usable every day.

With Fospha, marketers don’t have to choose between methodologies.

You can run rigorous experiments, validate causal impact, and measure performance daily, all within one unified operating system for growth.

Teams use this to justify budget shifts with finance and to focus spend where marginal profit improves.

We don’t replace your experiment stack or incrementality partners; we sit alongside them as the always-on layer that turns one-off test reads into daily, full-funnel guidance.
